HTTP has contributed greatly to the spread of the Internet. Its new version, HTTP/3 (HTTP version 3), is now being specified, and draft version 20 was released on 2019/4/23.

In this article, we will look at the characteristics of HTTP/3 based on the history of HTTP.

HTTP Specification Structure

There are two main elements in the HTTP RFC specification:

- Syntax

- Semantics

"Syntax" defines how information is conveyed to the other party efficiently over the network.

It specifies the rules for caching and Keep-Alive, and the message format that determines where each piece of data defined in Semantics is placed. For HTTP/1.1, this part is specified in RFC 7230.

"Semantics" defines the information itself that is conveyed to the other party.

It specifies request methods such as GET, POST, and CONNECT, response status codes such as 200 (OK), and header fields. For HTTP/1.1, this part is specified in RFC 7231. If you want to check the list of methods or status codes, the table of contents of this RFC is easy to read and understand.
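To make the split concrete, here is a minimal Python sketch of a plain HTTP/1.1 request. The method, target, and header fields are Semantics; the request line, the "Name: value" layout, and the CRLF line endings are Syntax. The function name `serialize_http11_request` is hypothetical, made up for this illustration.

```python
from typing import Dict


def serialize_http11_request(method: str, target: str, headers: Dict[str, str]) -> bytes:
    # Semantics: WHAT is said (method, target, header fields).
    # Syntax: HOW it is laid out on the wire (request line, "Name: value", CRLF).
    lines = [f"{method} {target} HTTP/1.1"]
    lines += [f"{name}: {value}" for name, value in headers.items()]
    # An empty line (CRLF CRLF) ends the header section.
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


req = serialize_http11_request("GET", "/index.html", {"Host": "example.com"})
print(req)  # b'GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n'
```

HTTP/2 and HTTP/3 keep the same Semantics (GET, /index.html, Host) but encode them in a completely different binary Syntax.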

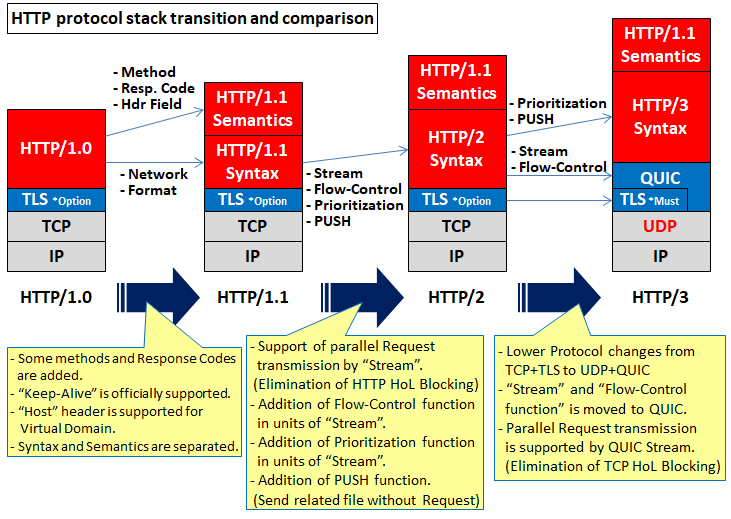

HTTP protocol stack transition and comparison

The following shows how the protocol stack and its functions have changed from HTTP/1.0 to HTTP/3.

Change from HTTP/1.0 to HTTP/1.1

HTTP/1.0 was specified in RFC 1945 in 1996, as the Internet was becoming popular, and it was improved through repeated trial and error. HTTP/1.1 (RFC 2068) was published the following year, 1997, and was revised twice after that. The final versions are the aforementioned RFC 7230 and RFC 7231.

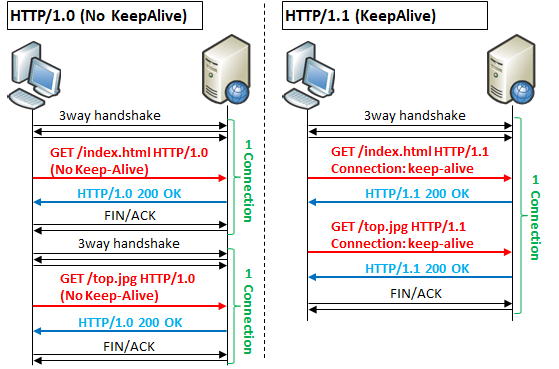

One of the big changes from HTTP/1.0 to 1.1 is official support for "Keep-Alive" (persistent connections). In HTTP/1.0, only one request could be made per TCP connection. HTTP/1.1 allows multiple requests to be made "in order" on a single TCP connection.
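The benefit of Keep-Alive can be seen with rough round-trip arithmetic. This is a minimal sketch under simplifying assumptions (no TLS, no pipelining, one round trip per request/response, one round trip for the TCP 3-way handshake); `total_rtts` is a hypothetical helper, not part of any library.

```python
def total_rtts(num_requests: int, keep_alive: bool) -> int:
    """Rough round-trip count for fetching resources one after another.

    Assumes no TLS and no pipelining: each request/response costs one RTT,
    and each TCP 3-way handshake costs one RTT before data can flow.
    """
    handshake, request = 1, 1
    if keep_alive:
        # HTTP/1.1: one handshake, then requests in order on the same connection.
        return handshake + num_requests * request
    # HTTP/1.0: a new TCP connection (and a new handshake) for every request.
    return num_requests * (handshake + request)


print(total_rtts(10, keep_alive=False))  # 20
print(total_rtts(10, keep_alive=True))   # 11
```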

Other improvements include new methods and response codes, and virtual host support (serving multiple domains from one server) through the "Host" header field.
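Virtual hosting works because the server can read the Host header and pick a site before doing anything else. Below is a minimal sketch; the `VIRTUAL_HOSTS` table, its paths, and the `document_root` helper are hypothetical, and the parsing is deliberately simplified (for example, it does not handle IPv6 address literals).

```python
from typing import Optional

# Hypothetical mapping: several domains served from a single IP address.
VIRTUAL_HOSTS = {
    "example.com": "/srv/www/example.com",
    "example.org": "/srv/www/example.org",
}


def document_root(raw_request: bytes) -> Optional[str]:
    """Choose a site's document root from the Host header of a request."""
    head = raw_request.split(b"\r\n\r\n", 1)[0].decode("ascii")
    for line in head.split("\r\n")[1:]:  # skip the request line
        name, _, value = line.partition(":")
        if name.strip().lower() == "host":  # header names are case-insensitive
            host = value.strip().split(":", 1)[0].lower()  # drop an optional port
            return VIRTUAL_HOSTS.get(host)
    return None  # no Host header (HTTP/1.0 did not require one)


print(document_root(b"GET / HTTP/1.1\r\nHost: example.org:8080\r\n\r\n"))
```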

Change from HTTP/1.1 to HTTP/2

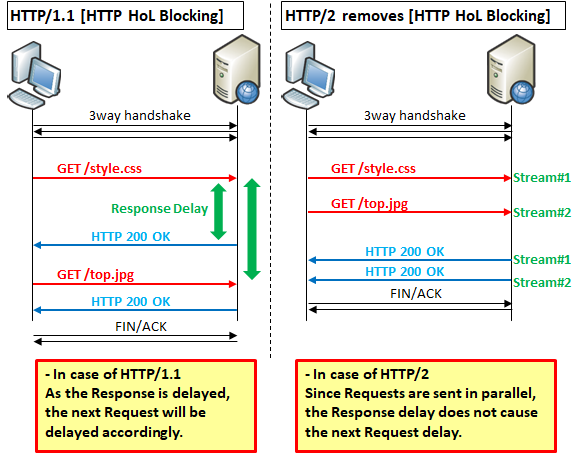

HTTP/1.1 made it possible to send multiple requests "in order" on one TCP connection, but this had a known problem: if one request stalls, every later request is delayed along with it.

This phenomenon, where a delay at the head of a queue delays everything queued behind it, is generally called HoL (Head-of-Line) Blocking.
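HoL Blocking can be expressed as a running maximum: when responses must be handed over in order, each one becomes usable only once it and everything ahead of it are ready. A minimal sketch; the ready times are made-up example values.

```python
from itertools import accumulate
from typing import List


def in_order_delivery_times(ready_times: List[float]) -> List[float]:
    """When each queued response can be handed over if delivery must stay in order.

    A response is usable only once it AND every response ahead of it are ready,
    i.e. the running maximum of the ready times.
    """
    return list(accumulate(ready_times, max))


# Response 2 is slow (ready at t=10); responses 3 and 4 were ready at t=2 and t=3,
# but they still have to wait for response 2.
print(in_order_delivery_times([1, 10, 2, 3]))  # [1, 10, 10, 10]
```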

Because of HoL Blocking, browsers that comply with HTTP/1.1 often open multiple TCP connections to the same server even though Keep-Alive is available.

To solve this problem, HTTP/2 allows multiple requests to be made "in parallel" over one TCP connection. This mechanism is called a "Stream".

The browser assigns a separate stream to each request and sends them together. The web server identifies each stream and sends the corresponding response on it.
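On the wire, HTTP/2 streams are interleaved as frames, each carrying a stream ID in a 9-byte header (24-bit length, 8-bit type, 8-bit flags, 1 reserved bit plus a 31-bit stream ID, per RFC 7540). The sketch below only handles DATA frames and skips HEADERS, SETTINGS, HPACK, and everything else a real implementation needs; `encode_frame` and `demultiplex` are hypothetical helpers.

```python
import struct
from collections import defaultdict
from typing import Dict

# 24-bit length split as 8 + 16 bits, then type, flags, and 32 bits for R + stream ID.
FRAME_HEADER = struct.Struct(">BHBBI")  # 9 bytes, big-endian, no padding
DATA = 0x0  # DATA frame type


def encode_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    length = len(payload)
    header = FRAME_HEADER.pack(length >> 16, length & 0xFFFF, frame_type, flags,
                               stream_id & 0x7FFFFFFF)
    return header + payload


def demultiplex(wire: bytes) -> Dict[int, bytes]:
    """Split interleaved frames back into per-stream byte strings."""
    streams: Dict[int, bytes] = defaultdict(bytes)
    offset = 0
    while offset + FRAME_HEADER.size <= len(wire):
        len_hi, len_lo, frame_type, _flags, stream_id = FRAME_HEADER.unpack_from(wire, offset)
        length = (len_hi << 16) | len_lo
        stream_id &= 0x7FFFFFFF  # clear the reserved bit
        start = offset + FRAME_HEADER.size
        if frame_type == DATA:
            streams[stream_id] += wire[start:start + length]
        offset = start + length
    return dict(streams)


# Two client-initiated streams (odd IDs 1 and 3) interleaved on one connection.
wire = (encode_frame(DATA, 0, 1, b"<html>") + encode_frame(DATA, 0, 3, b"body {")
        + encode_frame(DATA, 0, 1, b"</html>") + encode_frame(DATA, 0, 3, b"}"))
print(demultiplex(wire))  # {1: b'<html></html>', 3: b'body {}'}
```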

HTTP/2 Semantics are unchanged from HTTP/1.1. In other words, the methods, response codes, and meanings of header fields are the same; what HTTP/2 greatly improved is the Syntax part, such as streams.

Other HTTP/2 improvements include Flow-Control and prioritization control at the HTTP level, and the PUSH feature, which lets the server send files it expects the client to need (such as linked .css or image files) without a request from the client.

HoL Blocking problem, again

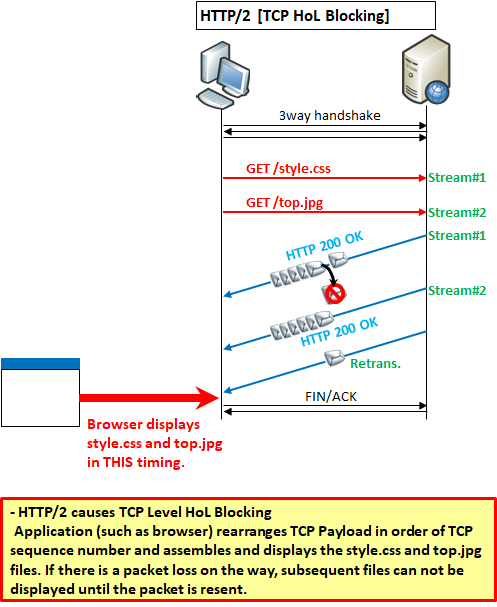

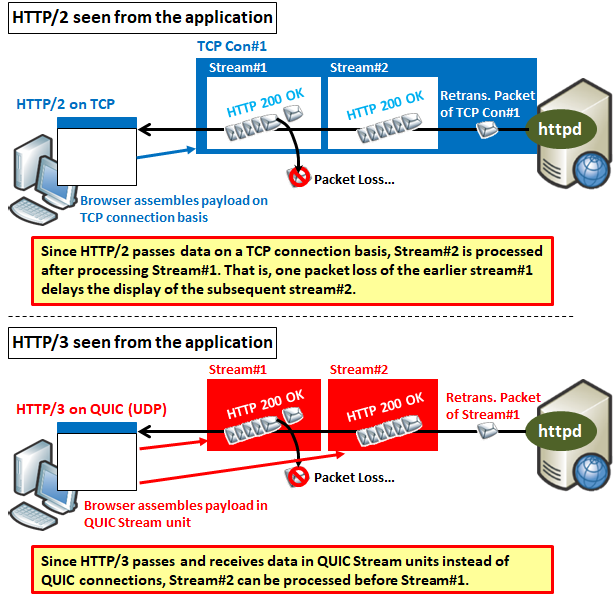

However, it turned out that even HTTP/2 still suffers from HoL Blocking.

Because HTTP/2 runs on top of TCP, the segmented TCP payload is reassembled on the client side strictly in TCP sequence-number order. If one packet is lost, the packets after it cannot be processed until it arrives.

This is a property of TCP itself, as implemented in the TCP/IP stack, rather than of HTTP. As a result, even when multiple requests are issued in parallel on separate streams, and even when every response after the lost packet has fully arrived, those responses cannot be displayed until the lost packet is retransmitted.
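The TCP-level blocking can be sketched with a toy receive buffer that, like TCP, only releases contiguous bytes. Imagine that "CCCC" and "DDDD" are complete responses for other HTTP/2 streams: they are already sitting in the buffer, yet HTTP/2 cannot see them. This is a simplification (no overlapping segments, no window, no ACKs), and `InOrderReceiveBuffer` is a hypothetical name.

```python
from typing import Dict


class InOrderReceiveBuffer:
    """Toy model of a TCP receive buffer: bytes are handed to the application
    strictly in sequence-number order, whatever HTTP/2 stream they belong to."""

    def __init__(self) -> None:
        self.next_seq = 0
        self.out_of_order: Dict[int, bytes] = {}  # seq -> segment payload

    def receive(self, seq: int, data: bytes) -> bytes:
        self.out_of_order[seq] = data
        delivered = b""
        # Release segments only while there is no gap in front of them.
        while self.next_seq in self.out_of_order:
            segment = self.out_of_order.pop(self.next_seq)
            delivered += segment
            self.next_seq += len(segment)
        return delivered


buf = InOrderReceiveBuffer()
print(buf.receive(0, b"AAAA"))   # b'AAAA'
print(buf.receive(8, b"CCCC"))   # b''  (the segment at seq 4 was lost)
print(buf.receive(12, b"DDDD"))  # b''  (still stuck behind the gap)
print(buf.receive(4, b"BBBB"))   # b'BBBBCCCCDDDD' (the retransmission fills the gap)
```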

In other words, HTTP-level HoL Blocking was resolved, but TCP-level HoL Blocking was not.

For this reason, many browsers are implemented to open multiple TCP connections to a single web server, just as with HTTP/1.1.

Meanwhile, Google had found in its own testing that the multiple round trips required by the TCP 3-way handshake and TLS negotiation greatly affect how fast web pages are displayed, and that reducing the round-trip time (RTT) and the number of round trips is more effective than increasing bandwidth.
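A toy calculation shows why round trips can matter more than bandwidth for typical small web resources. The model below (round trips times RTT, plus size divided by bandwidth) ignores TCP slow start, parallel connections, and server processing, and the numbers (4 round trips, 100 ms RTT, a 100 KB page, 10 Mbps) are made-up assumptions, not Google's measurements.

```python
def load_time_ms(round_trips: int, rtt_ms: float, size_bytes: int, bandwidth_bps: float) -> float:
    """Toy model: latency cost (round trips x RTT) plus transfer time (size / bandwidth).

    Ignores TCP slow start, parallel connections, processing time, etc.
    """
    transfer_ms = size_bytes * 8 * 1000 / bandwidth_bps
    return round_trips * rtt_ms + transfer_ms


baseline = load_time_ms(round_trips=4, rtt_ms=100, size_bytes=100_000, bandwidth_bps=10_000_000)
double_bw = load_time_ms(round_trips=4, rtt_ms=100, size_bytes=100_000, bandwidth_bps=20_000_000)
half_trips = load_time_ms(round_trips=2, rtt_ms=100, size_bytes=100_000, bandwidth_bps=10_000_000)
print(baseline, double_bw, half_trips)  # 480.0 440.0 280.0
```

Under these assumptions, doubling the bandwidth saves 40 ms, while halving the number of round trips saves 200 ms.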

Reflecting the idea that round trips matter more than bandwidth, Google developed the SPDY and QUIC protocols. (This is a digression from the main story, but the change from TLS 1.2 to TLS 1.3, which cuts the full handshake from two round trips to one, follows the same trend.)

Against this background, the move away from TCP took shape, and it was decided that HTTP/3 would run over UDP (more precisely, over QUIC).

Change from HTTP/2 to HTTP/3

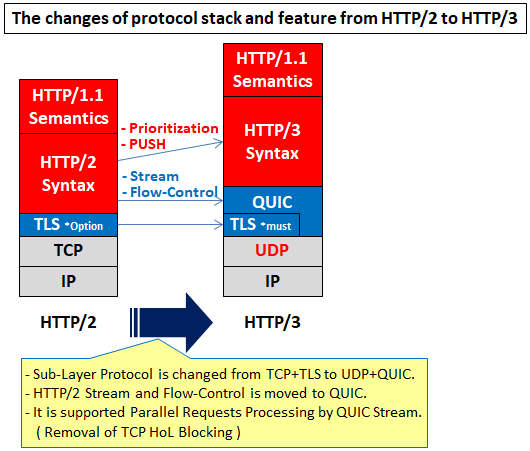

HTTP/3 runs on a new protocol called QUIC, which in turn runs over UDP. HTTP/3 Semantics have not changed from HTTP/1.1 or HTTP/2.

So the main change in HTTP/3 is in the Syntax part, and it consists mainly of moving HTTP/2 from TCP+TLS to UDP+QUIC and adapting it accordingly.

Streams and Flow-Control are no longer part of HTTP/3 itself; they have moved down into QUIC.

As a result, UDP+QUIC provides most of the functions that TCP+TLS provided:

- TCP connection -> QUIC Connection-ID

- TCP Sequence Numbers(ack/retransmission) -> QUIC Packet Numbers

- TCP Flow-Control (Window) -> QUIC Flow-Control (Window)

Moving to UDP is expected to bring the following benefits.

Speed improvement by removing the TCP 3-way handshake

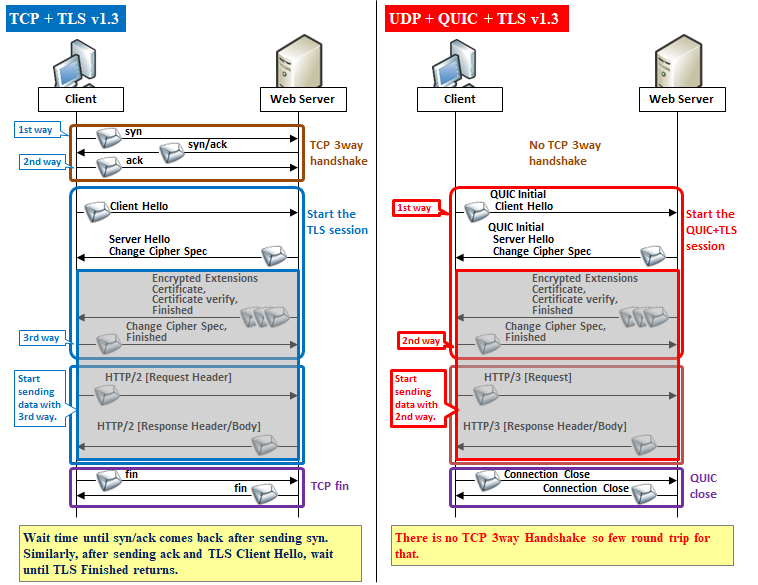

As mentioned above, these days reducing the number of round trips does more for speed than expanding bandwidth, and eliminating the TCP 3-way handshake removes one round trip.

The figure above compares "TCP + TLS v1.3" with "UDP + QUIC + TLS v1.3".

The figure shows 1-RTT, which uses one round trip to establish a QUIC session. When a session is resumed, a method called 0-RTT is also available, which sends application data (HTTP/3 etc.) together with the resumption message. However, 0-RTT is somewhat less secure (for example, 0-RTT data can be replayed by an attacker), so it is up to the application developer whether to use it.
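The handshake comparison can be summarized as round-trip counts before the first HTTP response arrives. This sketch assumes a "resumed" session always uses 0-RTT (which, as noted, is the application's choice), and it ignores DNS, packet loss, and TCP Fast Open; the table and function names are hypothetical.

```python
# Round trips spent on handshakes before the first HTTP request can be sent.
# Assumption: "resumed" means 0-RTT early data is used.
HANDSHAKE_RTTS = {
    ("tcp+tls1.3", False): 2,  # TCP 3-way handshake (1) + TLS 1.3 full handshake (1)
    ("tcp+tls1.3", True): 1,   # TCP handshake still needed; TLS 1.3 0-RTT data follows it
    ("quic", False): 1,        # QUIC performs its transport and TLS 1.3 handshakes together
    ("quic", True): 0,         # QUIC 0-RTT: the request goes out in the first flight
}


def time_to_first_response_ms(transport: str, resumed: bool, rtt_ms: float) -> float:
    # Handshake round trips, plus one round trip for the request and its response.
    return (HANDSHAKE_RTTS[(transport, resumed)] + 1) * rtt_ms


for transport in ("tcp+tls1.3", "quic"):
    for resumed in (False, True):
        label = "resumed" if resumed else "new"
        print(transport, label, time_to_first_response_ms(transport, resumed, 100))
```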

Solving TCP HoL Blocking

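QUIC passes data to the application (the web server) per QUIC stream rather than per QUIC connection. A lost packet therefore delays only the streams whose data it carried, while data for other streams is delivered as soon as it arrives.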
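A minimal sketch of per-stream delivery: each stream keeps its own in-order buffer keyed by byte offset, so a gap in one stream never holds back another. Stream IDs 0 and 4 are used because QUIC numbers client-initiated bidirectional streams 0, 4, 8, and so on; the class names are hypothetical and the model ignores retransmission, flow control, and stream closing.

```python
from collections import defaultdict
from typing import Dict


class StreamBuffer:
    """Receive buffer for ONE stream: releases its bytes in offset order."""

    def __init__(self) -> None:
        self.next_offset = 0
        self.pending: Dict[int, bytes] = {}  # offset -> data received early

    def receive(self, offset: int, data: bytes) -> bytes:
        self.pending[offset] = data
        delivered = b""
        while self.next_offset in self.pending:
            chunk = self.pending.pop(self.next_offset)
            delivered += chunk
            self.next_offset += len(chunk)
        return delivered


class StreamDemux:
    """Independent StreamBuffer per stream ID: a gap in one stream
    never blocks data that belongs to another stream."""

    def __init__(self) -> None:
        self.streams: Dict[int, StreamBuffer] = defaultdict(StreamBuffer)

    def on_stream_data(self, stream_id: int, offset: int, data: bytes) -> bytes:
        return self.streams[stream_id].receive(offset, data)


rx = StreamDemux()
print(rx.on_stream_data(0, 0, b"<html>"))    # b'<html>'
# The packet carrying stream 0 at offset 6 is lost; stream 4 is unaffected:
print(rx.on_stream_data(4, 0, b"body{}"))    # b'body{}'
print(rx.on_stream_data(0, 11, b"</html>"))  # b'' (waiting for offset 6)
print(rx.on_stream_data(0, 6, b"hello"))     # b'hello</html>'
```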

Roaming when the client IP changes

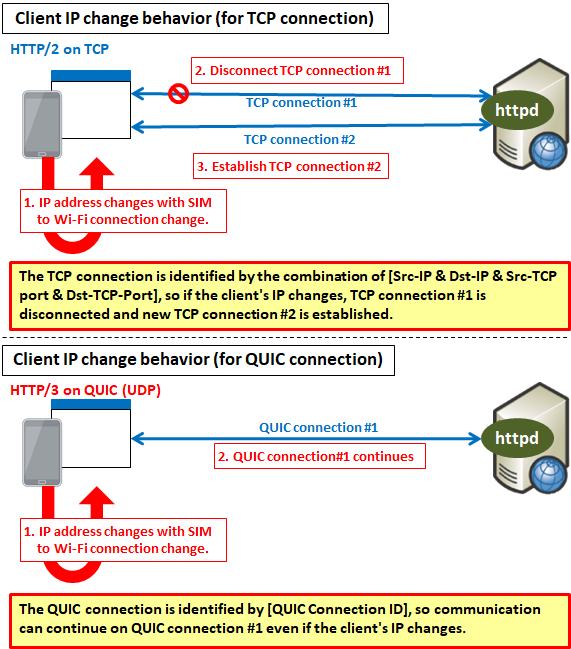

A common scenario on smartphones is switching from the mobile (SIM) network to Wi-Fi, or vice versa. When this happens, the smartphone's IP address changes. With TCP, the connection then has to be re-established, because a TCP connection is identified by the combination of source/destination IP addresses and port numbers.

With QUIC over UDP, however, the connection between client and server is identified by a QUIC Connection ID, so the connection can continue even when the IP address changes.
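The difference comes down to what the server uses as the lookup key for a connection. This toy model uses documentation-range IP addresses and a made-up Connection ID; real QUIC also validates the new network path and may switch to another Connection ID issued for the same connection, which is left out here.

```python
from typing import Dict, NamedTuple, Optional


class FourTuple(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


def lookup_tcp(table: Dict[FourTuple, str], addr: FourTuple) -> Optional[str]:
    # TCP: the address/port 4-tuple IS the connection's identity.
    return table.get(addr)


def lookup_quic(table: Dict[bytes, str], connection_id: bytes) -> Optional[str]:
    # QUIC: the connection is found via the Connection ID carried in each packet.
    return table.get(connection_id)


on_mobile = FourTuple("198.51.100.7", 50000, "203.0.113.10", 443)
on_wifi = FourTuple("192.0.2.25", 50123, "203.0.113.10", 443)  # new IP after switching
conn_id = bytes.fromhex("1f2e3d4c5b6a7988")  # hypothetical Connection ID

tcp_table = {on_mobile: "session A"}
quic_table = {conn_id: "session A"}

print(lookup_tcp(tcp_table, on_wifi))    # None: a new TCP connection is needed
print(lookup_quic(quic_table, conn_id))  # session A: the same connection continues
```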

Other features

HTTP/3 also has a Prioritization-Control function. (This feature was added in HTTP/2 and is inherited by HTTP/3.)

A client (a browser, etc.) tells the server which priority it should give to each of its parallel requests.

The server can respond according to these priorities. However, this is up to the server, which in the worst case may ignore them.
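Here is a minimal sketch of a server honoring (or ignoring) client priorities. The single integer priority, where a lower number means more urgent, is an assumption for illustration; it is not the actual HTTP/2 or HTTP/3 priority encoding, and `response_order` is a hypothetical helper.

```python
from typing import List, Tuple


def response_order(requests: List[Tuple[str, int]], honor_priority: bool = True) -> List[str]:
    """Order in which a server sends responses.

    Each request is (path, priority); a lower number means "more urgent"
    (a convention assumed for this sketch, not the real wire format).
    """
    if not honor_priority:
        # The server is free to ignore the hints and simply use arrival order.
        return [path for path, _ in requests]
    # sorted() is stable, so equal priorities keep their arrival order.
    return [path for path, _ in sorted(requests, key=lambda r: r[1])]


reqs = [("/hero.jpg", 3), ("/style.css", 0), ("/app.js", 1), ("/ad.png", 5)]
print(response_order(reqs))                        # ['/style.css', '/app.js', '/hero.jpg', '/ad.png']
print(response_order(reqs, honor_priority=False))  # ['/hero.jpg', '/style.css', '/app.js', '/ad.png']
```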

There is also a Flow-Control function. It works almost the same way as TCP Flow-Control: each side tells the other, in a timely manner, how much more data its receive buffer can accept (a sliding window), which keeps communication efficient without overrunning the receiver.

Flow-Control is applied both per QUIC connection and per QUIC stream.
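The two levels of Flow-Control can be sketched as a sender that must stay under both a connection-wide limit and a per-stream limit. This is loosely modeled on QUIC's MAX_DATA (connection) and MAX_STREAM_DATA (stream) frames, which express limits as cumulative byte counts that only grow; the class and method names are hypothetical, and real QUIC details (initial limits from transport parameters, blocked signals) are omitted.

```python
from typing import Dict


class FlowControlledSender:
    """Toy sender that respects a connection-wide limit and per-stream limits.

    Limits are cumulative byte counts granted by the receiver; they only grow.
    """

    def __init__(self, max_data: int, initial_max_stream_data: int) -> None:
        self.max_data = max_data                       # connection-level limit
        self.initial_stream_limit = initial_max_stream_data
        self.stream_limits: Dict[int, int] = {}        # stream ID -> stream-level limit
        self.stream_sent: Dict[int, int] = {}          # stream ID -> bytes sent so far
        self.total_sent = 0

    def _stream_limit(self, stream_id: int) -> int:
        return self.stream_limits.get(stream_id, self.initial_stream_limit)

    def send(self, stream_id: int, want: int) -> int:
        """Send up to `want` bytes on a stream; return how many were allowed."""
        stream_room = self._stream_limit(stream_id) - self.stream_sent.get(stream_id, 0)
        conn_room = self.max_data - self.total_sent
        n = max(0, min(want, stream_room, conn_room))
        self.stream_sent[stream_id] = self.stream_sent.get(stream_id, 0) + n
        self.total_sent += n
        return n

    def on_max_stream_data(self, stream_id: int, new_limit: int) -> None:
        # Receiver raised the limit for one stream (like MAX_STREAM_DATA).
        self.stream_limits[stream_id] = max(self._stream_limit(stream_id), new_limit)

    def on_max_data(self, new_limit: int) -> None:
        # Receiver raised the limit for the whole connection (like MAX_DATA).
        self.max_data = max(self.max_data, new_limit)


sender = FlowControlledSender(max_data=1000, initial_max_stream_data=600)
print(sender.send(0, 800))  # 600: stream 0 hits its own limit
print(sender.send(4, 800))  # 400: the connection limit (1000) is now used up
print(sender.send(4, 100))  # 0: blocked until the receiver grants more credit
sender.on_max_data(1500)
print(sender.send(4, 100))  # 100
```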
